Identity Crisis in the Digital Age: Using Science-Based Biometrics to Combat Malicious Generative AI

The potential applications of Artificial Intelligence (AI) are immense. AI helps with everything from early cancer diagnosis to reducing public-sector administrative bureaucracy to making our working lives more productive. However, generative AI is also used for illicit purposes. It is being used to impersonate individuals and to create content that spreads disinformation online. It is also increasingly used to carry out identity fraud.

Resilient identity verification is needed to confirm that a remote individual’s identity, content, and credentials are genuine. This involves binding the individual to their trusted, government-issued ID.

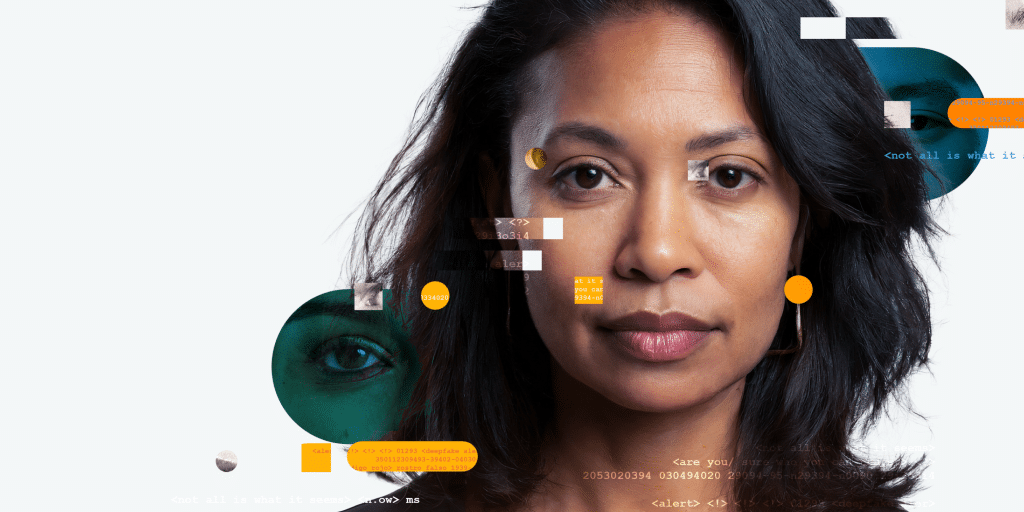

Identity Crisis in the Digital Age: Using Science-Based Biometrics to Combat Malicious Generative AI explains why biometric face verification is the most reliable and usable method of verifying identity remotely. Yet not all face verification is created equal. On-premise solutions are exposed to reverse engineering, while Presentation Attack Detection (PAD) systems offer little defense against AI-generated attacks.

Technologies must deploy a one-time biometric to ensure that a remote individual is not only real but also genuinely present in real time. This alone, however, is not enough to provide resilience against criminal uses of generative AI. Vendors must take a science-based approach: operating active threat monitoring to gain insight into the threat landscape, responding to zero-day vulnerabilities, and sharing intelligence with the authorities.