June 23, 2022

Some of your social media contacts may have been generated with AI.

No, really – a recent study from Stanford Internet Observatory found that over 1000 deepfake profiles are lurking on LinkedIn… and that’s just the findings from one study on one social network. The study identified accounts that were created using entirely fictitious identities with false education and employment credentials.

This study highlights a few topics of concern:

What Are Deepfakes and How Are They Being Used on Social Media?

Deepfakes are synthetic videos or images created using AI-powered software. They are often used to misrepresent people by showing them saying and doing things that they didn’t say or do. Deepfakes have been used for pranks and entertainment, but also for more malicious purposes. Concerningly, the number of deepfake videos posted online is more than doubling year-on-year.

In the Stanford Internet Observatory’s LinkedIn study, deepfakes were used for commercial purposes. The deepfake accounts sent messages on behalf of a legitimate company in order to pitch its software to unsuspecting LinkedIn members – making the deepfakes a sales tool, essentially. This benefits the company because creating new deepfake accounts lets it circumvent LinkedIn’s message limit.

While this may seem relatively benign, it’s only the surface of a deeper issue. Social media accounts using computer-generated faces have committed more serious acts.

Other examples include:

- Deepfakes pushing political disinformation.

- Deepfakes harassing activists.

- Deepfakes scamming a CEO out of $243,000.

Part of what makes deepfakes such a dangerous, scalable threat is that people often cannot spot them. There can be giveaways, certainly: the positioning and color of the eyes, inconsistencies around the hairline, and other visual strangeness.

Recent survey data from iProov found that 57% of global respondents believe they could tell the difference between a real video and a deepfake, up from 37% in 2019. But the truth is that sophisticated deepfakes can be indistinguishable to the human eye. To reliably detect a deepfake, deep learning and computer vision technologies are required to analyze certain properties, such as how light reflects on real versus synthetic skin.

Social media companies have a duty to protect their users, advertisers and employees from these growing scalable threats. Luckily, there’s a solution.

How Can Identity Verification Establish Trust Online and on Social Media?

We’ve established that the various applications of deepfakes by bad actors can be severe. But the real, long-term danger of deepfakes is the erosion of trust online. Video evidence will become less trustworthy, leading to plausible deniability: “that wasn’t me”, people might say, “it’s a deepfake!”

The deepfake threat all boils down to one question: how can you trust that someone is who they say they are online? The answer is digital identity verification. The most secure, convenient, and reliable means of identification is biometrics, particularly facial biometric verification.

Digital identity verification is already a part of our online lives. Many everyday processes require it, such as:

- Setting up a bank account

- Registering for government support programs

- Verifying identity for travel

These examples require us to prove who we are in order to complete important tasks online. Yet social media is one sphere where identity verification is absent. Anyone — or anything, in the case of deepfakes and bots — can create an account on social media and apparently do what they want, without much fear of it being traced back to them.

One option for social media networks is to introduce accountability online through face verification, ensuring that someone making a social media account is who they say they are. Various companies in the online dating sector, such as Tinder, have turned to identity verification as we predicted in 2020 and 2021. What this looks like will depend on the network. Identity verification could be optional, granting the user an “identity verified” icon like on Tinder.

The Benefits of Identity Verification for Social Media Networks

- Reduced volume of spam, deepfakes, and bot accounts on social networks.

- Improved experience, reassurance and security for users – we’ll know we’re interacting with a verified human.

- Helps track and prevent serious abuse, fraud, and social engineering on social networks.

- Helps social media users feel safer online.

- Defends the reputation of social media networks, as they are seen to be taking action to protect users online.

How Can Liveness and Dynamic Liveness Combat Deepfakes and Help the Social Media Sector?

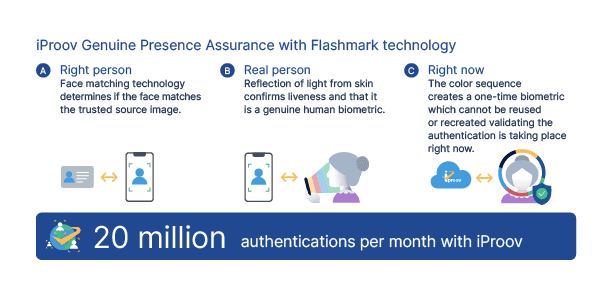

Deepfakes are a unique and challenging threat that requires a unique solution. That’s why iProov’s patented biometric technology, Dynamic Liveness, is designed to offer advanced protection against deepfakes and other synthetic or digital injected attacks.

If social media networks choose to implement technology to authenticate the identity of an online user and protect against deepfakes, they will need to ensure that they select the right technology. Liveness detection is an important part of the identity verification process and not all liveness is equal.

iProov’s Dynamic Liveness delivers all the benefits of liveness detection, providing greater accuracy on whether a user is the right person and a real person. But Dynamic Liveness also verifies that the user is authenticating right now, giving extra protection against the use of digital injected attacks that use deepfakes or other synthetic media.
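
To make the three properties concrete, here is a minimal sketch of how a service might combine them into one decision. It is not iProov’s implementation or API: the scores, thresholds, 30-second freshness window, and every name below are hypothetical, and the face-matching and liveness models that would produce the scores are assumed to exist elsewhere. The “right now” check is approximated with a single-use, time-limited challenge, which is one common way to reject replayed or pre-recorded submissions.

```python
from dataclasses import dataclass
import secrets
import time

# Assumed freshness window for a challenge; the value is illustrative only.
CHALLENGE_TTL_SECONDS = 30


@dataclass
class Challenge:
    nonce: str          # random value issued by the server for one attempt
    issued_at: float    # server time when the challenge was issued
    used: bool = False  # a challenge may be consumed only once


@dataclass
class Evidence:
    match_score: float     # hypothetical similarity to the enrolled face, 0..1
    liveness_score: float  # hypothetical likelihood of a live person, 0..1
    nonce: str             # nonce returned together with the capture


def issue_challenge(now=None):
    """Create a fresh single-use challenge for one authentication attempt."""
    issued_at = time.time() if now is None else now
    return Challenge(nonce=secrets.token_hex(16), issued_at=issued_at)


def verify(evidence, challenge, now=None,
           match_threshold=0.9, liveness_threshold=0.9):
    """Accept only if all three checks pass."""
    now = time.time() if now is None else now

    right_person = evidence.match_score >= match_threshold
    real_person = evidence.liveness_score >= liveness_threshold
    right_now = (
        not challenge.used
        and secrets.compare_digest(evidence.nonce, challenge.nonce)
        and 0 <= now - challenge.issued_at <= CHALLENGE_TTL_SECONDS
    )

    # Consume the challenge on every attempt so it can never be replayed.
    challenge.used = True

    return {
        "right_person": right_person,
        "real_person": real_person,
        "right_now": right_now,
        "accepted": right_person and real_person and right_now,
    }
```

In this sketch, a submission with high match and liveness scores is still rejected if it arrives with an expired or already-used challenge, which is the role the “authenticating right now” check plays against injected recordings.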

A further benefit of Dynamic Liveness is that it can be used for ongoing authentication on social media. Whenever users return to the app, they present their face and prove they are the right person, a real person, and that they are authenticating right now. This means that nobody else can access that account or send messages from it, and the account holder cannot carry out an activity and later insist they did not do it.

iProov works on any device with a user-facing camera – it does not require specialist hardware for authentication, providing maximum accessibility and inclusivity for end-users. Additionally, Genuine Presence Assurance (GPA) comes with iProov Security Operations Centre (iSOC)-powered active threat management. iSOC uses advanced machine-learning technology to monitor day-to-day operations and provide resilience against sophisticated emerging attacks.

We’re in an arms race between deepfake generators and deepfake detectors, and enterprises such as LinkedIn must prioritise their security measures and consider how they can protect their customers from attacks. The bottom line is that Dynamic Liveness technology protects against deepfakes by assuring that an online user is a real person actually authenticating right now.

Social Media, Deepfakes, and Digital Identity Verification: A Summary

- Deepfakes are being used on social networks, with social media sites being targeted for phishing (whereby people inadvertently disclose information, often because of manipulation).

- While some uses of deepfakes on social media are relatively benign, others are dangerous: political disinformation, harassment, and so on.

- Social media platforms must protect their users. Identity verification provides reassurance, safety and protection from harassment and disinformation.

- There are many ways in which identity verification could be implemented by social media networks. Identity verification could be optional, granting the user an “identity verified” icon like on Tinder.

- iProov’s Dynamic Liveness enables organizations to verify user digital identity, with protection against deepfakes and other synthetic media.

- This would enable a better social media user experience, with increased trust, safety, and security for users. Additionally, introducing digital identity verification can help to defend the reputation of social media brands, as they are seen to be taking action to protect users online.

Find out more: The Deepfake Threat

Book your iProov demo or contact us.